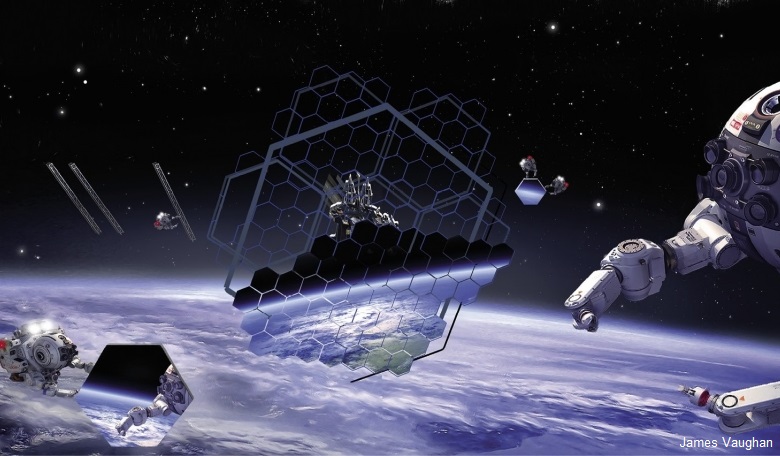

As robotic dexterity and computational capabilities have improved, autonomous systems for both automated missions and the assembly of human in-orbit infrastructure have become increasingly important. This trend promises to continue with the use of artificial intelligence and machine learning as so-called ‘collaborative technologies’ are integrated into a variety of mission concepts. Here, the authors provide a progress update based on research work conducted at the University of Surrey, UK.

Space science and human spaceflight were very much at the origin of the global space effort. They have not only been vital sources of inspiration and international cooperation but were also key to advancing space technologies. Today, the inherent challenges for humans in the space environment provide at least some of the inspiration for the concept of autonomous operation; put simply, if a system can make its own decisions, without human intervention, it can help to avoid placing human lives in danger.

To develop new solutions to support human-robot interaction in space, the University of Surrey collaborated with technology consultancy Intelcomm in a project funded by the UK’s SPace Research and Innovation Network for Technology (SPRINT) and supported by the FAIR-SPACE Hub, a SPRINT partner at the Surrey Space Centre. The University of Surrey is a well-known innovator in small satellite technologies, while Intelcomm UK has extensive experience in developing prototypes and fielding mission-critical systems for the international communications and aerospace industries.

The research team joined the national SPRINT business support programme to focus on new Autonomous Decision Support System (ADSS) technology. ADSS technology has the potential to greatly reduce an astronaut’s dependency on Earth-based mission control and return control to the astronaut, giving them full oversight of robotic operating systems. This will help astronauts to manage difficult or potentially dangerous failure scenarios in a timely manner and avoid escalation, ensuring their own safety and the safety of the robotic or autonomous system.

This line of research originated from work done by the US government in providing people with vast amounts of information that could be assimilated in real-time. NASA and the European Space Agency (ESA) are, of course, keenly focused on ensuring astronaut safety, so the opportunities to look at ADSS technology for space applications quickly became apparent.

Project aims

The general aim of the ADSS project was to develop a system that used multiple ‘intelligent sensors’ to feed a Machine Learning (ML)-based core system, which continuously examined measurement data and compared it with the manufacturer’s historic or ‘longevity data’ to allow fault prediction. This involved adapting a ‘predictive maintenance’ algorithm from a Connected and Autonomous Vehicle (CAV) and integrating it into a space robotic system. University expertise and facilities were then utilised to prove the concept for an enhanced human-machine (autonomous/remote rover) interface and develop an ADSS for space situational awareness in a human-robot interaction scenario. A patent is pending for this new predictive maintenance algorithm.

A specific aim of the ADSS project was to develop the application of Extended Reality (XR) technology for use in spacecraft and other related environments; the idea being that ADSS can monitor, detect and identify spacecraft malfunctions in real-time and enable just-in-time human intervention for repairs. The challenge of the SPRINT project was to use 3D CAD modelling to overlay what is visualised in real-time, using machine learning and AI techniques for the predictive algorithm.

The intention is that, using a head-mounted display, an astronaut on board a space station, or a spacecraft in deep space, will be able to see the virtual representations of models or component parts overlaid on already-assembled sections of spacecraft; this would allow them to identify, locate and receive information about the malfunction and instructions for repair. Such a system provides for safer space exploration by enabling crews to remain fully aware of the health of an identified spacecraft system at all times, mainly because it makes data-enabled decisions in real-time and increases the level of autonomy of the robotic platform.

Visualisation of a situation is one of the most intuitive and effective methods of assimilating dynamically changing information. Thus, the project aimed to develop a multi-dimensional, real-time, computer-generated schematic model that is constantly updated in an XR environment, using wireless sensor arrays for fault detection with the help of a vehicle health management system.

Implementation of this type of monitoring system will not only enable a crew to have oversight of the health of the system but will also enable real-time decision-making that results in repairs without Earth team support.

XR is an umbrella term for all the immersive technologies: augmented reality (AR), virtual reality (VR) and mixed reality (MR), plus those that are still to be created. XR is rapidly making its way into industries, including the space industry, and is likely to have a huge impact on how we see ‘work’.

Fault detection technology

Clearly, the business of transferring information is important, not just pooling information into one place but allowing for distribution among different users with different requirements, while also remaining mindful of what the information is being used for. Due to the widespread use of intelligent sensors across all the different onboard systems, it will be possible to proactively scan a complete spacecraft to identify, and hopefully correct, potential problems before they escalate.

In addition to comparisons with the manufacturer’s longevity data, there are likely to be situations where a small variation in one monitored element is not, in itself, outside the pre-set limits. However, when seen in conjunction with small changes in other elements, it may constitute the precursor of a forthcoming fault. Under these circumstances it would be possible for the pre-emptive fault-predictive software to send an alert.
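The idea of several in-limit readings jointly signalling a precursor can be illustrated with a minimal Python sketch. It is not the project's patent-pending algorithm: the sensor names, baseline values, per-sensor limit and combined limit are all hypothetical, and the combined score (a sum of squared standardised deviations) assumes the sensors vary independently.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SensorBaseline:
    """Hypothetical per-sensor baseline, e.g. derived from longevity data."""
    name: str
    mean: float
    std: float
    limit_sigma: float = 3.0  # illustrative pre-set limit, in standard deviations


def assess(baselines, readings, combined_limit=11.345):
    """Check each sensor against its own limit, then check the combined deviation.

    combined_limit is an assumed tuning value: 11.345 is roughly the 99th
    percentile of a chi-square distribution with 3 degrees of freedom, which
    suits the three-sensor example below only if the sensors are independent.
    """
    z = {b.name: (readings[b.name] - b.mean) / b.std for b in baselines}
    over_limit = [b.name for b in baselines if abs(z[b.name]) > b.limit_sigma]
    combined = sum(v * v for v in z.values())
    return {
        "z_scores": z,
        "individual_alarms": over_limit,
        "combined_score": combined,
        # Precursor: no single sensor is out of limits, but together they are unusual.
        "precursor_alert": not over_limit and combined > combined_limit,
    }


# Illustrative baselines and readings (all values invented for this example).
BASELINES = [
    SensorBaseline("motor_temp_c", mean=40.0, std=2.0),
    SensorBaseline("motor_current_a", mean=1.5, std=0.1),
    SensorBaseline("vibration_g", mean=0.30, std=0.05),
]

drifting = {"motor_temp_c": 44.0, "motor_current_a": 1.72, "vibration_g": 0.395}
nominal = {"motor_temp_c": 40.5, "motor_current_a": 1.52, "vibration_g": 0.31}

drift_result = assess(BASELINES, drifting)
nominal_result = assess(BASELINES, nominal)
print(drift_result["individual_alarms"], drift_result["precursor_alert"])
print(nominal_result["individual_alarms"], nominal_result["precursor_alert"])
```

In the drifting case each reading sits about two standard deviations from its baseline, so no individual limit is breached, yet the combined score exceeds the assumed limit and a precursor alert is raised. A real system would need to account for correlation between sensors, which this sketch ignores.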

To determine if small variations detected in a sequence of sensors are likely to escalate into an imminent event, some level of pre-knowledge of the overall system has to be established. In the initial set-up phase, suitably experienced engineers will train the predictive ML software with as many fault sequence scenarios as possible. Then, a series of actual faults will be induced to ensure that the software is able to identify the percentage likelihood of an event occurring.
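As a rough illustration of training on labelled fault scenarios and then reporting a percentage likelihood, the sketch below fits a small logistic-regression model in plain Python. Logistic regression is used here only as a simple stand-in; the article does not say which ML model the project uses. The two features (standardised deviations of two sensors) and all training values are invented for the example.

```python
import math


def sigmoid(x):
    # Numerically stable logistic function.
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def train(samples, labels, epochs=1000, lr=0.5):
    """Fit logistic-regression weights with batch gradient descent."""
    n_features = len(samples[0])
    weights = [0.0] * n_features
    bias = 0.0
    n = len(samples)
    for _ in range(epochs):
        grad_w = [0.0] * n_features
        grad_b = 0.0
        for x, y in zip(samples, labels):
            err = sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias) - y
            for i, xi in enumerate(x):
                grad_w[i] += err * xi
            grad_b += err
        weights = [w - lr * g / n for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b / n
    return weights, bias


def fault_likelihood_percent(model, x):
    """Return the modelled likelihood of a fault as a percentage."""
    weights, bias = model
    return 100.0 * sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)


# Hypothetical training set: each row holds two sensor deviations (in standard
# deviations from baseline); label 1 means the scenario led to a fault.
scenarios = [
    [0.1, -0.2], [0.3, 0.1], [-0.4, 0.2], [0.0, -0.1],  # nominal operation
    [2.0, 1.8], [1.7, 2.2], [2.4, 1.5], [1.9, 2.0],      # induced fault precursors
]
outcomes = [0, 0, 0, 0, 1, 1, 1, 1]

model = train(scenarios, outcomes)
likely_fault = fault_likelihood_percent(model, [2.1, 1.9])
likely_nominal = fault_likelihood_percent(model, [0.0, 0.0])
print(f"drifting readings: {likely_fault:.1f}% | nominal readings: {likely_nominal:.1f}%")
```

With only eight cleanly separated scenarios the model becomes very confident, which is unrealistic; the value of inducing many actual faults, as described above, is precisely to calibrate such likelihoods against real behaviour.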

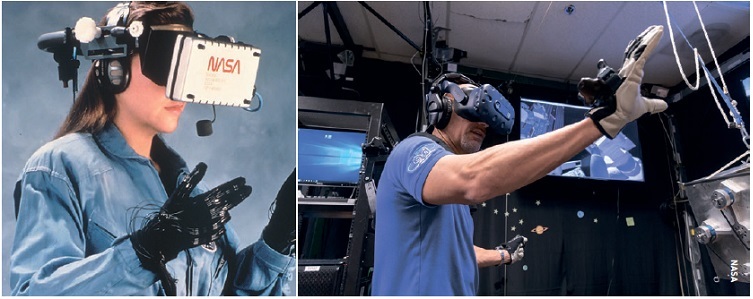

Head-mounted displays. Left: 1985, NASA’s Virtual Interface Environment Workstation (VIEW) allowed the user to grasp virtual objects and issue commands by gesture in a simplistic virtual environment to operate remotely controlled robotic devices. Right: 2019, ESA astronaut Luca Parmitano navigates through a computer-generated environment to learn the route he might take outside the Space Station on a spacewalk, helping him to take decisions and act more quickly during the actual spacewalk.

As more actual faults are introduced, the ML software will become increasingly able to determine not just the cause of the fault but, of much greater importance, what effect it was able to detect on other associated sensors. This then becomes the prime basis for setting and then potentially re-setting all the intelligent sensors. Of course, while it is important to generate a fault alert as early as possible, it is equally important not to trigger excessive ‘false alarms’ which risk de-sensitising users to real issues.
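The trade-off between early alerts and false alarms can be made concrete with a short sketch that picks an alert threshold from validation data. This is one possible approach under stated assumptions, not the project's method: the likelihood scores and the 10 per cent false-alarm budget are invented, and `pick_alert_threshold` is a hypothetical helper.

```python
def pick_alert_threshold(nominal_scores, fault_scores, max_false_alarm_rate):
    """Return the lowest threshold whose false-alarm rate stays within budget.

    A lower threshold alerts earlier but raises more false alarms, so the
    candidates are tried in ascending order and the first acceptable one wins.
    Returns None if no candidate threshold meets the budget.
    """
    candidates = sorted(set(nominal_scores) | set(fault_scores))
    for threshold in candidates:
        false_alarms = sum(s >= threshold for s in nominal_scores)
        false_alarm_rate = false_alarms / len(nominal_scores)
        if false_alarm_rate <= max_false_alarm_rate:
            detected = sum(s >= threshold for s in fault_scores)
            return {
                "threshold": threshold,
                "false_alarm_rate": false_alarm_rate,
                "detection_rate": detected / len(fault_scores),
            }
    return None


# Hypothetical fault-likelihood scores (0 to 1) from a validation run.
nominal_scores = [0.05, 0.10, 0.12, 0.20, 0.35, 0.40, 0.08, 0.15, 0.22, 0.30]
fault_scores = [0.38, 0.55, 0.70, 0.85, 0.90]

choice = pick_alert_threshold(nominal_scores, fault_scores, max_false_alarm_rate=0.1)
print(choice)
```

Re-running this selection as more induced-fault data arrives is one simple way to 're-set' alert levels without hand-tuning each sensor.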

By combining this technology with a man/machine interface, which is fundamental to the way such a system is put together, we can create ‘situational awareness’. The introduction of graphical information through head-mounted displays was explored because of an acute awareness of the dangers of overloading users with information that is not intuitive. Most importantly, the head-up display avoids the need for the user to sit in front of a keyboard or screen.

Further work

The new Internet of Things (IoT) technology allows us to import and convey large amounts of information in an intuitive way. Although some of this technology is already in place, the integration of real-time information is relatively new and the SPRINT project has allowed us to bring all of these technologies together.

Although astronauts in space are the primary target for this particular development, other industries have also shown interest in the technology. Following the SPRINT project, the team is working on a revolutionary immersive extended reality (XR) model and will continue with applications for other sectors such as automotive and healthcare.

We intend to be first to market with a technology that enables high-risk industries with routine human-machine interface (HMI) requirements to improve safety and operational effectiveness by linking live sensor data with real-time XR tools. Monitoring the wireless intelligent sensors will not only give users oversight of the health of the system, but will also enable real-time decision-making to identify faults in the system and carry out just-in-time repairs.

Given the current collaboration of experts in the various areas, the team is in an excellent position to move closer to the ‘holy grail’ of a comfortable head-mounted display that makes the user aware of everything around them and able to react to an issue before it escalates to become a critical situation. In such situations, having the right technology could be the difference between life and death.

ADSS technology will enable the crew to remain fully aware of the health of an identified spacecraft system at all times, making data-enabled decisions in real-time and increasing the level of autonomy of the robotic platform.

Acknowledgement

Special thanks to Dr Ashith Babu for his contribution to the ADSS project.

About the authors

Saber Fallah is a Senior Lecturer (Associate Professor) at the University of Surrey and is the director of the Connected and Autonomous Vehicles Lab (CAV-Lab) in the department of Mechanical Engineering Sciences. Dr Fallah’s work has contributed to state-of-the-art research in the areas of connected autonomous vehicles and advanced driver assistance systems, and has so far produced four patents. He is also co-author of a textbook entitled Electric and Hybrid Vehicles: Technologies, Modeling and Control [John Wiley 2014].

Tatiana Farcas is a programme manager and AR/VR Research Assistant for the ADSS project at the University of Surrey’s CAV-Lab. She is also the CEO of ADSS Technology Ltd, a spin-out company from the ADSS project funded by SPRINT, the FAIR-SPACE Hub, the University of Surrey and Intelcomm UK Ltd. Farcas has an MEc in finance, banking and international affairs and an MSc in Sustainability Building Conservation.