Artificial Intelligence (AI) is rapidly transforming the space frontier, powering autonomous satellites, deep space exploration and data analysis. However, this revolution brings a critical paradox, because the same AI systems also introduce sophisticated, often unseen, cybersecurity vulnerabilities that could jeopardise multibillion-dollar assets and critical global infrastructure. This article dissects these emerging challenges and charts a course for developing resilient security frameworks and international cooperation to safeguard our AI-driven future in space.

The operational tempo and complexity of modern space missions increasingly mandate the integration of Artificial Intelligence. From autonomous spacecraft guidance, navigation and control (GNC) and in-orbit servicing to the real-time processing of vast Earth observation (EO) data streams and predictive analytics for satellite health, AI is no longer a theoretical adjunct but a core enabling technology.

As AI systems become the brains of next-generation space missions, we must confront a new spectrum of cyber threats ranging from sophisticated adversarial attacks to compromised data integrity, all amplified by the unique, unforgiving and increasingly contested environment beyond Earth. Our reliance on space-based assets for global communications, precision navigation and timing (PNT), strategic intelligence and significant economic activity has long established cybersecurity as a non-negotiable imperative. The integrity and availability of these systems are intrinsically linked to national security and international stability, making their protection a paramount concern for state and commercial actors alike.

The vulnerability of space-based systems is compounded by their physical remoteness, inherent signal delay, radiation exposure and an increasingly contested geopolitical landscape. Consequently, a proactive and comprehensive approach encompassing robust mitigation strategies and adaptive governance frameworks is urgently required. This analysis examines the primary AI-driven cybersecurity challenges confronting the space sector.

AI’s expanding role

Artificial Intelligence is rapidly transitioning from a niche research area to a fundamental component across the entire space mission lifecycle. Its integration is driven by the tangible benefits of enhanced operational efficiency, expanded mission capabilities and the necessity for greater autonomy, particularly in deep space and complex orbital environments.

For example, onboard data processing and autonomous decision-making are key applications that demonstrate AI’s expanding footprint. Satellites are increasingly equipped with AI algorithms for real-time data triage and intelligent payload management. A notable example is the European Space Agency’s Phi-sat-1, which utilised onboard AI to filter cloud-obscured EO imagery, significantly reducing downlink bandwidth requirements and improving data utility. This trend extends to autonomous fault detection, isolation and recovery (FDIR) systems, which enable spacecraft to respond to anomalies without ground intervention.

Another critical area is autonomous navigation, rendezvous and docking (AR&D). AI-powered computer vision and sensor fusion are essential for the complex AR&D manoeuvres required for in-orbit servicing, assembly and manufacturing (ISAM) and debris removal. These systems allow for precise relative navigation and control in dynamic, uncooperative environments where human-in-the-loop control is impractical due to signal delay or operational complexity.

AI tools are also employed for mission planning and optimisation, covering complex mission parameters from launch trajectory design and constellation deployment strategies to resource allocation for multi-satellite operations and scientific observation scheduling. Machine learning models can analyse vast historical and simulated datasets to identify near-optimal solutions in search spaces far too large for manual analysis.

Furthermore, AI algorithms contribute significantly to predictive maintenance by analysing telemetry data from satellites and ground support equipment to predict component failures and system degradation. This proactive approach to maintenance minimises downtime, extends operational lifespans and optimises resource allocation for ground support, thereby reducing overall mission costs.

Fundamentally, the exponential growth in data generated by Earth observation, space situational awareness (SSA) and deep space exploration missions necessitates AI for large-scale data analysis. AI techniques are vital for tasks such as automated object detection and classification in EO imagery, anomaly detection in SSA data for identifying potential threats or conjunctions, and scientific discovery in astrophysical datasets.

The overarching impetus for this widespread adoption of AI is the pursuit of more resilient, capable and cost-effective space operations. AI facilitates a shift towards more autonomous systems (capable of operating with reduced ground dependency), which is crucial for missions beyond Earth orbit and for managing increasingly large and complex satellite constellations.

Cybersecurity challenges

The integration of AI into space systems, while offering significant advantages, concurrently introduces a distinct class of cybersecurity vulnerabilities that differ substantially from traditional IT threats. These challenges stem from the inherent characteristics of AI models, their training processes and the infrastructure supporting their deployment.

For example, adversarial machine learning (AML) represents a potent threat category in which attackers deliberately manipulate AI model inputs or training processes to induce erroneous or malicious behaviour. For space systems reliant on AI for critical functions, the implications are severe.

Evasion attacks, one form of AML, involve crafting subtle, often imperceptible perturbations to input data (e.g. sensor readings, imagery and communication signals) that cause a well-trained AI model to misclassify or misinterpret information. In a space context, an evasion attack could involve an adversary transmitting carefully constructed radio frequency signals designed to mimic valid telecommands, potentially tricking an AI-driven command validation system into accepting them.

Similarly, AI-based image recognition systems used for satellite navigation or threat detection could be fooled by manipulated visual inputs, leading to incorrect manoeuvring or failure to identify hostile objects. The use of AI-generated ‘deepfakes’ for disinformation campaigns, potentially targeting ground control or public perception of space activities, also falls under this threat vector, impacting trust and decision-making.
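The mechanics of an evasion attack can be illustrated with the fast gradient sign method (FGSM), a well-known technique from the adversarial machine learning literature. The sketch below applies a targeted form of it to a toy logistic classifier; the weights, the four input features and the "valid"/"invalid" labels are hypothetical stand-ins for a real command-validation or image-recognition model, which would be non-linear and far larger. It shows how a small, bounded change to every input feature can flip the model's decision.

```python
import math

# Hypothetical toy model: logistic regression over four normalised signal
# features; a probability above 0.5 means the input is accepted as "valid".
WEIGHTS = [1.5, -2.0, 0.5, 1.0]
BIAS = -0.2


def score(x):
    """Probability that input x is classified as 'valid'."""
    z = sum(w * xi for w, xi in zip(WEIGHTS, x)) + BIAS
    return 1.0 / (1.0 + math.exp(-z))


def sign(v):
    return (v > 0) - (v < 0)


def fgsm(x, epsilon, towards_valid=True):
    """Fast gradient sign method for a linear logit z = w.x + b.

    dz/dx_i = w_i, so each feature is shifted by epsilon along sign(w_i)
    (or against it) to push the score towards the attacker's goal.
    No feature changes by more than epsilon (an L-infinity budget).
    """
    direction = 1 if towards_valid else -1
    return [xi + direction * epsilon * sign(w) for xi, w in zip(x, WEIGHTS)]


if __name__ == "__main__":
    x = [0.1, 0.2, 0.2, 0.1]       # logit -0.25, so the model rejects it
    x_adv = fgsm(x, epsilon=0.1)   # logit rises by 0.1 * sum(|w|) = 0.5
    print(f"original score {score(x):.3f}, perturbed score {score(x_adv):.3f}")
```

Because each feature moves by at most epsilon, the perturbed input can remain within the range that a simple plausibility check would accept, which is part of what makes such attacks hard to spot.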

Another category is data poisoning, which targets the AI model’s training phase. By surreptitiously injecting corrupted, mislabelled or maliciously crafted data into the training dataset, an adversary can compromise the model’s integrity from its inception. The poisoned model may then exhibit specific, pre-programmed vulnerabilities or biases when deployed, or it might perform certain tasks incorrectly. For space systems, this could occur during the ground-based training of AI for Earth observation (e.g. introducing false positives for specific targets) or for autonomous GNC systems, leading to unreliable or dangerous operational behaviour once deployed in orbit. The distributed nature of some data collection and training pipelines can exacerbate this risk.
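A toy example shows how a handful of mislabelled training samples can shift a model's decision boundary. The sketch uses a one-dimensional nearest-centroid classifier, chosen for readability rather than because EO pipelines use it; the feature values and the "present"/"absent" labels are hypothetical. Three poisoned samples are enough to turn an ambiguous input into a false positive.

```python
from statistics import mean


def train_centroids(samples):
    """'Train' a nearest-centroid classifier: average the feature per label."""
    by_label = {}
    for value, label in samples:
        by_label.setdefault(label, []).append(value)
    return {label: mean(values) for label, values in by_label.items()}


def predict(centroids, value):
    """Return the label whose centroid lies closest to the input value."""
    return min(centroids, key=lambda label: abs(centroids[label] - value))


# Hypothetical clean training set: (detector feature value, label).
CLEAN = [(0.1, "absent"), (0.2, "absent"), (0.3, "absent"),
         (0.7, "present"), (0.8, "present"), (0.9, "present")]

# The adversary slips in samples with a low feature value but a "present"
# label, dragging the "present" centroid from 0.8 down to 0.4.
POISON = [(0.0, "present")] * 3

if __name__ == "__main__":
    probe = 0.45
    print("clean model:", predict(train_centroids(CLEAN), probe))
    print("poisoned model:", predict(train_centroids(CLEAN + POISON), probe))
```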

Sophisticated adversaries may also attempt to illicitly replicate or reverse-engineer proprietary AI models deployed on space assets or within ground segments. Through carefully designed queries to the model (black-box attacks) or by gaining access to model parameters (white-box attacks), an attacker can create a functionally equivalent substitute. This not only represents intellectual property theft but also allows the adversary to analyse the stolen model for vulnerabilities, develop tailored evasion attacks or repurpose the model for their own objectives.
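For the simplest possible case, a model whose output is a linear function of its inputs, black-box extraction reduces to solving equations: one query at the origin reveals the bias, and one query per unit vector reveals each weight. The sketch below illustrates this equation-solving style of extraction against a hypothetical three-feature model. Non-linear models such as deep networks need many more queries and a surrogate trained on the responses, but the principle of reconstructing behaviour from input/output pairs is the same.

```python
def make_black_box():
    """Hypothetical deployed model: the attacker can call query() but cannot
    read the parameters held in its closure."""
    secret_w = [0.8, -1.2, 2.5]
    secret_b = 0.3

    def query(x):
        return sum(w * xi for w, xi in zip(secret_w, x)) + secret_b

    return query


def extract_linear_model(query, n_features):
    """Recover (w, b) of a linear model with n_features + 1 queries.

    query(0) = b, and query(e_i) - b = w_i for each unit vector e_i.
    """
    b = query([0.0] * n_features)
    w = []
    for i in range(n_features):
        e_i = [0.0] * n_features
        e_i[i] = 1.0
        w.append(query(e_i) - b)
    return w, b


if __name__ == "__main__":
    query = make_black_box()
    w, b = extract_linear_model(query, n_features=3)

    def surrogate(x):
        return sum(wi * xi for wi, xi in zip(w, x)) + b

    probe = [1.0, 2.0, -0.5]
    print("recovered weights:", [round(v, 6) for v in w], "bias:", round(b, 6))
    print("original vs surrogate:", query(probe), surrogate(probe))
```

Limiting how many queries an AI service will answer, and returning coarse labels rather than raw scores, are common ways of raising the cost of this kind of attack.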

Finally, there is AI-generated malware. Emerging research indicates the potential for AI itself to be used in the creation of highly adaptive and evasive malware. Such malware could be designed to specifically target vulnerabilities within AI software or to dynamically alter its behaviour to avoid detection by conventional cybersecurity defences. For space systems, this could manifest as sophisticated implants that disrupt AI-driven processes or could exfiltrate sensitive data processed by onboard AI.

Vulnerabilities in AI infrastructure and supply chain

The security of AI systems is not solely dependent on the robustness of the algorithms themselves but extends to the entire lifecycle infrastructure, from development and training to deployment and ongoing maintenance. The space environment introduces unique complexities to securing this pipeline.

The end-to-end process of creating and deploying AI for space missions involves numerous stages: data collection and curation, model design, training on specialised hardware (often GPUs/TPUs), validation, packaging and deployment to target platforms (ground stations or spacecraft). Each stage presents potential attack vectors. For instance, compromised development tools, insecure data storage or vulnerabilities in the software frameworks used for model training can lead to the insertion of ‘backdoors’ or the manipulation of model behaviour. Securing this pipeline for remote space assets, where physical access for verification is impossible, is particularly challenging.
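One concrete building block for securing this pipeline is an integrity manifest: a cryptographic digest recorded for every artefact (training data, model weights, configuration) at each hand-off and re-checked before the next stage or before uplink. The sketch below uses SHA-256 from Python's standard library; the artefact names are hypothetical. In practice the manifest itself would also need to be signed, otherwise an attacker able to alter an artefact could simply rewrite its digest.

```python
import hashlib
import hmac


def sha256_hex(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


def build_manifest(artefacts: dict) -> dict:
    """Record a SHA-256 digest for every artefact handed to the next stage."""
    return {name: sha256_hex(blob) for name, blob in artefacts.items()}


def verify_manifest(artefacts: dict, manifest: dict) -> list:
    """Return names of artefacts that are missing, unexpected or altered."""
    problems = []
    for name in sorted(set(manifest) | set(artefacts)):
        if name not in artefacts or name not in manifest:
            problems.append(name)
        elif not hmac.compare_digest(sha256_hex(artefacts[name]), manifest[name]):
            problems.append(name)
    return problems


if __name__ == "__main__":
    # Hypothetical artefacts; a real pipeline would hash files on disk.
    artefacts = {"training/labels.csv": b"id,label\n1,cloud\n",
                 "model/weights.bin": b"\x01\x02\x03\x04"}
    manifest = build_manifest(artefacts)
    artefacts["model/weights.bin"] = b"\x01\x02\xff\x04"  # simulated tampering
    print("failed checks:", verify_manifest(artefacts, manifest))
```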

AI models, like any software, may contain vulnerabilities discovered post-deployment or require updates to improve performance or adapt to new data. However, patching AI systems on operational satellites is significantly more complex than for terrestrial IT. Limited uplink bandwidth, constrained onboard processing power and memory, the risk of a failed update and the long operational lifespans of space assets (often more than 15 years) make timely and secure updates a formidable challenge. This can leave known vulnerabilities unaddressed for extended periods.

Moreover, many advanced AI models, particularly deep learning networks, operate as ‘black boxes’, and their internal decision-making processes can be opaque, even to their developers, making it difficult to fully understand why a particular output was generated. This lack of transparency hinders comprehensive security auditing, vulnerability assessment and post-incident forensic analysis. Without clear explainability, verifying that a model is behaving as intended and has not been subtly compromised becomes exceptionally difficult, eroding trust in AI-driven critical functions.

The efficacy and safety of AI systems are fundamentally dependent on the trustworthiness of the data they process. In the space domain, where AI models ingest and act upon vast streams of sensor data, telemetry and command inputs, ensuring data integrity and confidentiality is paramount.

The AI features in Sierra Space’s Dream Chaser will grant the spacecraft the ability to autonomously navigate to and dock with the International Space Station, while dynamically adapting to changing conditions in space. This adaptability extends to monitoring and tracking through real-time data analysis. For AI systems making critical real-time decisions, the assurance that input data is genuine and unaltered is non-negotiable.

AI systems in space architectures, whether onboard or in ground segments, process data that can be highly sensitive (e.g. intelligence, surveillance and reconnaissance (ISR) data, proprietary scientific findings or critical spacecraft telemetry). Unauthorised modification (tampering) of this data before or during AI processing can lead to flawed analyses, incorrect decisions and even catastrophic mission failures. For instance, manipulated GPS signals fed into an AI navigation system could cause a spacecraft to deviate from its intended trajectory. Exfiltration of sensitive data processed or generated by AI systems likewise poses significant risks to national security, commercial competitiveness and mission objectives.

Securing these data pipelines against both internal and external threats across complex, often distributed, space architectures is a critical challenge, as is ensuring that AI decisions are based on authentic, uncorrupted data feeds. AI models are only as reliable as the data they are fed. If the input data is compromised, for example, through malicious attack, sensor malfunction or unintentional corruption, the AI’s output and subsequent actions will be inherently flawed. This necessitates robust mechanisms for data validation, authentication and provenance tracking throughout the data lifecycle.
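Data validation can start with simple physics-based plausibility checks applied before any value reaches an AI model. The sketch below screens one telemetry channel against a hypothetical valid range and a hypothetical maximum credible change between consecutive samples. It will not detect a patient attacker who stays within both bounds, which is why it complements, rather than replaces, authentication and provenance tracking.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Limits:
    """Hypothetical bounds for one telemetry channel (e.g. a temperature in degC)."""
    low: float
    high: float
    max_step: float  # largest credible change between consecutive samples


def validate_stream(samples, limits):
    """Yield (value, ok) for each sample.

    ok is False if the value is outside [low, high] or jumps further than
    max_step from the last sample that passed both checks.
    """
    last_good = None
    for value in samples:
        in_range = limits.low <= value <= limits.high
        plausible = last_good is None or abs(value - last_good) <= limits.max_step
        ok = in_range and plausible
        if ok:
            last_good = value  # only validated samples update the reference
        yield value, ok


if __name__ == "__main__":
    limits = Limits(low=-50.0, high=80.0, max_step=5.0)
    readings = [20.0, 21.0, 60.0, 22.0, 200.0]
    for value, ok in validate_stream(readings, limits):
        print(value, "accepted" if ok else "rejected")
```

Because a rejected sample never becomes the new reference, a single spoofed spike cannot drag the baseline; the trade-off is that a genuine, abrupt step change would also be rejected and needs a separate recovery rule.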

For AI systems making critical real-time decisions, such as collision avoidance, autonomous docking or target engagement, the assurance that input data is genuine and unaltered is non-negotiable. The challenge lies in implementing these verification measures efficiently within the resource constraints of space-based platforms, most notably bandwidth and processing power.
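Authentication can be kept lightweight. The sketch below protects each frame with a keyed message authentication code (HMAC-SHA256, truncated to save bandwidth) and a monotonically increasing counter that rejects replays. It is loosely inspired by the ideas behind link-layer security protocols such as CCSDS Space Data Link Security (SDLS) but is not an implementation of that standard; the frame layout, the 8-byte tag length and the key handling are assumptions for illustration.

```python
import hashlib
import hmac
import struct

TAG_LEN = 8      # hypothetical truncated tag length in bytes, chosen to save bandwidth
COUNTER_LEN = 8


def protect(key: bytes, counter: int, payload: bytes) -> bytes:
    """Build a frame: 8-byte big-endian counter || payload || truncated HMAC-SHA256."""
    header = struct.pack(">Q", counter)
    tag = hmac.new(key, header + payload, hashlib.sha256).digest()[:TAG_LEN]
    return header + payload + tag


class Receiver:
    """Accepts only frames that are authentic and newer than the last accepted one."""

    def __init__(self, key: bytes):
        self.key = key
        self.last_counter = -1

    def accept(self, frame: bytes):
        if len(frame) < COUNTER_LEN + TAG_LEN:
            return None  # too short to carry a counter and a tag
        header = frame[:COUNTER_LEN]
        payload = frame[COUNTER_LEN:-TAG_LEN]
        tag = frame[-TAG_LEN:]
        expected = hmac.new(self.key, header + payload, hashlib.sha256).digest()[:TAG_LEN]
        if not hmac.compare_digest(tag, expected):
            return None  # altered in transit or forged without the key
        (counter,) = struct.unpack(">Q", header)
        if counter <= self.last_counter:
            return None  # replay of an earlier frame
        self.last_counter = counter
        return payload
```

Truncating the tag trades some forgery resistance for bandwidth: an 8-byte tag still leaves an attacker roughly a 1 in 2^64 chance per forged frame, while the counter ensures that a captured genuine frame cannot simply be re-transmitted later.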

Security of autonomous space systems

As AI grants space systems increasing levels of autonomy, the potential impact of a cybersecurity compromise that cedes control of these physical assets escalates dramatically.

When AI directly controls spacecraft manoeuvring, robotic manipulators or other kinetic functions, a successful cyberattack could lead to unauthorised control or hijacking. This could result in intentional collisions with other space objects (creating debris or damaging critical assets), deviation from mission objectives, denial of service, or even the weaponisation of a compromised space asset.

The ability of an AI to make rapid, independent decisions means that a compromised autonomous system could execute damaging actions before ground control can intervene, especially given communication delays. While autonomy is a key driver for AI adoption, maintaining appropriate levels of human oversight and implementing robust fail-safe mechanisms are critical for mitigating the risks of AI cyber failures. This includes designing systems with clearly defined operational boundaries for AI, independent monitoring systems to detect anomalous AI behaviour and reliable methods for human intervention or override, even under challenging communication conditions.

The design of these human-in-the-loop or human-in-command architectures for highly autonomous systems requires careful consideration of cognitive load, decision timelines and trust in AI.
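The combination of clearly defined operational boundaries, independent monitoring and human override can be expressed as a simple arbitration gate, a pattern often discussed under the names runtime assurance or Simplex architecture. In the sketch below, the command fields, the envelope limits and the safe fallback command are hypothetical; a flight implementation would run the check on independent, separately verified software and would authenticate any ground override.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Command:
    delta_v: float        # requested burn magnitude, m/s
    attitude_rate: float  # requested slew rate, deg/s


# Hypothetical operational envelope, fixed and verified before launch.
MAX_DELTA_V = 0.5
MAX_ATTITUDE_RATE = 2.0
SAFE_COMMAND = Command(delta_v=0.0, attitude_rate=0.0)  # e.g. hold attitude, no burn


def within_envelope(cmd: Command) -> bool:
    """Independent check that does not reuse any of the AI's own logic."""
    return 0.0 <= cmd.delta_v <= MAX_DELTA_V and abs(cmd.attitude_rate) <= MAX_ATTITUDE_RATE


def arbitrate(ai_cmd: Command, ground_override: Optional[Command] = None):
    """Return (command to execute, source).

    An explicit (authenticated) ground override takes priority; otherwise the
    AI command passes only if it lies inside the envelope, and any violation
    falls back to the pre-verified safe command.
    """
    if ground_override is not None:
        return ground_override, "ground"
    if within_envelope(ai_cmd):
        return ai_cmd, "ai"
    return SAFE_COMMAND, "fallback"


if __name__ == "__main__":
    print(arbitrate(Command(0.2, 1.0)))                     # inside the envelope
    print(arbitrate(Command(5.0, 1.0)))                     # excessive burn, falls back
    print(arbitrate(Command(5.0, 1.0), Command(0.1, 0.0)))  # operator decides
```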

The trend towards large, coordinated satellite constellations for communications and Earth observation often involves AI for distributed decision-making and collaborative behaviour among multiple spacecraft. Securing these multi-agent systems introduces new complexities in that a compromise in one node could potentially propagate to others, or coordinated attacks could target the inter-satellite communication links and distributed AI algorithms that govern constellation behaviour. Verifying the collective security and resilience of such swarms or formations against sophisticated cyber threats is an emerging research and engineering challenge.
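One widely used way to limit how far a single compromised node can skew a collective decision is robust aggregation. The sketch below fuses scalar estimates shared by peers (hypothetically, each satellite's range estimate to the same tracked object, in km) using the median rather than the mean, and flags nodes whose reports deviate strongly from it. The node names, values and tolerance are invented for illustration.

```python
from statistics import mean, median


def fused_estimate(reports):
    """Median of peer reports.

    As long as fewer than half of the reports are faulty or malicious, the
    median lies between the smallest and largest honest report, whereas a
    single extreme value can drag the mean arbitrarily far.
    """
    return median(reports.values())


def suspect_nodes(reports, tolerance):
    """Node IDs whose report differs from the median by more than tolerance."""
    centre = fused_estimate(reports)
    return sorted(node for node, value in reports.items() if abs(value - centre) > tolerance)


if __name__ == "__main__":
    reports = {"sat-a": 7000.1, "sat-b": 7000.3, "sat-c": 7000.2,
               "sat-d": 7000.4, "sat-e": 1.0e9}  # sat-e is compromised
    print("mean:", mean(reports.values()))
    print("median:", fused_estimate(reports))
    print("suspects:", suspect_nodes(reports, tolerance=1.0))
```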

In the regulatory sphere, the rapid pace of AI development and its application in space has outstripped the establishment of comprehensive governance frameworks, creating significant uncertainty and potential inconsistencies in security practices. There is currently a notable absence of globally accepted technical standards specifically addressing the safety, security and ethical deployment of AI in space systems. While general cybersecurity standards exist, they often do not adequately cover the unique attack surfaces and failure modes associated with AI (e.g. adversarial attacks and data poisoning).

This lack of clear benchmarks makes it difficult for operators and manufacturers to design, verify and certify AI systems for space use to a common, agreed-upon level of assurance.

Without established standards, defining concrete, verifiable security requirements for AI in space procurement and operations becomes challenging. This ambiguity can lead to inconsistent security postures across different missions and organisations, potentially creating weak links in the increasingly interconnected space ecosystem.

Furthermore, the absence of clear regulatory oversight for AI security in space complicates efforts to enforce best practices, assign liability in the event of AI-induced incidents and foster international cooperation on managing shared risks. Addressing these governance gaps is crucial for building trust and ensuring the responsible and secure use of AI in space.

Amplifying factors

The inherent cybersecurity challenges associated with AI are significantly magnified by the unique and unforgiving characteristics of the space domain. These environmental and contextual factors create additional layers of complexity for securing AI-enabled space systems.

Spacecraft operate in an environment characterised by extreme temperature fluctuations, vacuum conditions and persistent exposure to ionising radiation (e.g. galactic cosmic rays and solar particle events). This radiation can induce single-event upsets (SEUs), single-event latch-ups (SELs) and total ionising dose effects in electronic components, including the specialised processors increasingly used for onboard AI.

Such effects can lead to data corruption, erroneous computations or even permanent hardware failure in AI systems, potentially creating exploitable vulnerabilities or mimicking cyberattack symptoms, thereby complicating diagnostics and response. Shielding and radiation-hardening add cost and complexity, and residual risks always remain.
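A standard mitigation for single-event upsets in memory and logic is triple modular redundancy (TMR): keep three copies of a value and take a bit-wise majority vote. The sketch below shows the voting rule and a periodic scrub that rewrites all copies; the word values are arbitrary. Logging which copy disagreed also gives operators a record that can help distinguish isolated radiation effects from patterns that look more like deliberate tampering.

```python
def tmr_vote(a: int, b: int, c: int) -> int:
    """Bit-wise 2-of-3 majority of three redundant copies of a word.

    Each output bit takes the value held by at least two copies, so an upset
    that flips bits in only one copy is masked.
    """
    return (a & b) | (a & c) | (b & c)


def scrub(copies):
    """Vote, rewrite every copy with the result and report which copies disagreed."""
    voted = tmr_vote(*copies)
    disagreeing = [i for i, value in enumerate(copies) if value != voted]
    return [voted] * 3, disagreeing


if __name__ == "__main__":
    word = 0b1011_0010
    upset = word ^ 0b0000_1000  # one bit flipped in copy 1 by a simulated SEU
    repaired, flagged = scrub([word, upset, word])
    print(bin(repaired[0]), "copies flagged:", flagged)
```

TMR is not absolute protection: if the same bit is flipped in two copies before the next scrub, the vote returns the corrupted value, which is why scrubbing frequency matters.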

Communication with space assets, particularly those in geostationary orbit (GEO) or deep space, is subject to significant signal propagation delays (latency) and constrained data transmission rates (bandwidth). This severely impacts the ability to perform real-time monitoring of AI systems, conduct timely forensic analysis of anomalous behaviour or rapidly deploy patches and updates in response to newly discovered vulnerabilities.

The inability to intervene swiftly can provide adversaries with a wider window of opportunity to exploit AI vulnerabilities or escalate an attack.
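The scale of the problem follows directly from the finite speed of light, t = d / c. The short calculation below uses approximate distances: the GEO altitude of 35,786 km (the slant range to a real ground station is somewhat longer) and the commonly cited range of roughly 54.6 to 401 million km for the Earth-Mars distance. It ignores processing and queuing time on the ground and on the spacecraft, so real delays are longer still.

```python
C_KM_PER_S = 299_792.458  # speed of light in vacuum


def one_way_delay_s(distance_km: float) -> float:
    """Signal propagation time over a straight-line distance."""
    return distance_km / C_KM_PER_S


# Approximate distances in km (see lead-in for assumptions).
CASES = {
    "GEO (35,786 km altitude)": 35_786.0,
    "Mars at closest approach (~54.6 million km)": 54.6e6,
    "Mars near conjunction (~401 million km)": 401e6,
}

if __name__ == "__main__":
    for name, distance in CASES.items():
        t = one_way_delay_s(distance)
        print(f"{name}: one-way {t:.3f} s, command plus telemetry round trip {2 * t:.3f} s")
```

The resulting one-way delays, around 0.12 s to GEO and roughly 3 to 22 minutes to Mars, show why a compromised autonomous system beyond Earth orbit could execute a damaging sequence before operators even receive the first telemetry showing it.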

Unlike terrestrial IT systems, physical access to operational space assets for repair, upgrade or direct forensic investigation is prohibitively expensive and, in most cases, impossible. Once a satellite is launched, its hardware configuration is largely fixed. This places an enormous premium on pre-launch security verification and necessitates robust remote management and recovery capabilities. Any AI vulnerabilities that require physical remediation or cannot be addressed via software updates may persist for the lifetime of the mission or render the system unusable.

After recognising space as a strategic domain in the 2022 European Council Strategic Compass, the European Commission and the High Representative developed the EU Space Strategy for Security and Defence. Its aims include enhancing the resilience and protection of space systems and services in the Union by developing EU space law focused on safety, resilience and sustainability; setting up an EU Space Information Sharing and Analysis Centre to raise awareness and facilitate the exchange of best practices on resilience measures for space capabilities; and developing technologies and capabilities to increase resilience.

Many space technologies, including AI applications for Earth observation, signals intelligence and autonomous manoeuvring, possess inherent dual-use (civil and military) capabilities. This makes AI-enabled space systems attractive targets for state-sponsored actors seeking intelligence advantages or counter-space capabilities. Attacks on such systems, or even perceived vulnerabilities, can quickly escalate geopolitical tensions due to the strategic importance of space assets and the difficulty in definitively attributing attacks in the space domain.

Moreover, the space domain is increasingly characterised as congested, contested and competitive. The proliferation of satellites from a growing number of state and commercial actors heightens the risk of unintentional interference and physical collisions.

AI systems designed for autonomous navigation and collision avoidance are critical in this environment, but a compromised or malfunctioning AI could exacerbate these risks, for example by executing an incorrect avoidance manoeuvre. The crowded environment also complicates threat attribution: distinguishing between a deliberate attack, an accidental malfunction and an environmental effect on an AI system can be exceptionally challenging, potentially leading to miscalculation and escalation.

Potential consequences

A successful cyberattack targeting AI systems within the space ecosystem, or a significant AI malfunction due to inherent vulnerabilities, can precipitate a spectrum of severe consequences. These impacts extend beyond individual missions, potentially affecting global infrastructure, economic stability and international security.

The most direct consequence is the partial or total inability of a space asset or system to perform its intended functions. This could range from reduced data collection quality from an AI-enhanced sensor to the inability of an autonomous rover to complete its scientific objectives, or the complete loss of a satellite if its AI-driven GNC system is critically compromised. For high-value, long-duration missions, such failures represent substantial financial and scientific losses.

Failures can also lead to the loss or compromise of sensitive data. AI systems often process, analyse and store highly sensitive information, including classified military intelligence, proprietary commercial data and unique scientific datasets. A cyber breach could lead to the exfiltration of this data by adversaries, its illicit modification to mislead decision-makers, or its permanent destruction. The compromise of cryptographic keys managed or utilised by AI systems could further widen the scope of data exposure.

There is also the severe risk of physical damage to space assets or the creation of space debris. If an AI system controlling kinetic functions (e.g. propulsion, robotic arms or docking mechanisms) is compromised, it could be commanded to perform actions resulting in physical damage to its own platform or other space objects. This includes intentional or unintentional collisions, leading to the creation of orbital debris. Such debris poses a long-term threat to all operational satellites, further congesting critical orbits and increasing the risk of a cascading Kessler-syndrome-like event.

Phi-sat-1 was the first experiment to demonstrate how onboard artificial intelligence can improve the efficiency of sending Earth observation data back to Earth. This revolutionary artificial intelligence technology flew on one of the two CubeSats that make up the FSSCat mission.

Furthermore, AI cyber failures could result in the loss of control over critical infrastructure. Many vital terrestrial infrastructures rely on space-based services, particularly for PNT signals (e.g. GPS, Galileo, GLONASS, BeiDou) and global communications. AI is increasingly being integrated into the management and security of these constellations. A successful cyberattack on the AI components of these systems could lead to widespread disruption of PNT services, impacting transportation, finance, energy grids and emergency services, or the denial/manipulation of critical communication relays.

High-profile AI failures or security breaches in space systems can significantly undermine confidence, leading to an erosion of trust in AI-powered space capabilities. This can slow down the adoption of beneficial AI technologies, increase regulatory scrutiny and create public apprehension, impacting investment and support for future space endeavours that depend on AI.

Finally, a critical consequence is the potential for misattribution and escalation of conflicts. Given the remote and often opaque nature of space operations, definitively attributing an AI system failure or anomalous behaviour to a malicious cyberattack, as opposed to a software bug, hardware malfunction, or even an unknown environmental effect, is exceptionally difficult. Misattribution of an incident, particularly in a tense geopolitical climate, could lead to incorrect retaliatory responses, diplomatic crises or even the escalation of hostilities between space-faring nations. The speed at which AI operates can also compress decision-making timelines, increasing the risk of rapid, ill-informed escalation.

Towards mitigation and future resilience

Addressing the multifaceted cybersecurity challenges posed by AI in space necessitates a proactive, multi-layered strategy encompassing technological innovation, rigorous development practices, adaptive security architectures and robust governance. The goal is to build inherent resilience into AI-enabled space systems from their inception.

Traditional software testing, verification and validation (TV&V) processes are often insufficient for AI systems due to their probabilistic nature and potential for emergent behaviours. The space sector requires specialised TV&V frameworks that can rigorously assess AI model robustness against adversarial attacks, evaluate data poisoning resilience, quantify model uncertainty and validate performance under nominal and off-nominal space environmental conditions. This includes developing standardised benchmarks and simulation environments tailored to space mission profiles.

Integrating security throughout the AI development lifecycle is critical. It involves secure coding practices for AI algorithms, vulnerability scanning of AI libraries and frameworks, secure management of training data and model artefacts, continuous integration/continuous deployment (CI/CD) pipelines with embedded security checks and provenance tracking for data and models to ensure integrity and traceability.

The black-box nature of many AI models hinders trust and complicates security auditing, so investing in and deploying Explainable AI (XAI) techniques is crucial for increasing the transparency and interpretability of AI decision-making in space systems. XAI can help operators understand why an AI made a particular decision, detect anomalous or biased behaviour and carry out more effective post-incident forensic analysis, thereby improving accountability and the ability to identify subtle compromises.
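The simplest form of explanation is feature attribution: how much each input contributed to a particular output. For a linear model this can be computed exactly as w_i (x_i - baseline_i), and the contributions sum to the change in score relative to a baseline input, a completeness property that methods such as integrated gradients and SHAP extend to non-linear models. The feature names, weights and nominal baseline below are hypothetical, standing in for an onboard anomaly-scoring model.

```python
# Hypothetical linear anomaly score over three housekeeping channels.
FEATURES = ["battery_temp_degC", "bus_voltage_V", "wheel_speed_rpm"]
WEIGHTS = [0.9, -0.4, 0.05]
BIAS = -3.0
NOMINAL = [20.0, 28.0, 3000.0]  # baseline: a known-good operating point


def anomaly_score(x):
    return sum(w * xi for w, xi in zip(WEIGHTS, x)) + BIAS


def attributions(x, baseline=NOMINAL):
    """Per-feature contribution to anomaly_score(x) - anomaly_score(baseline).

    Exact for a linear model: contribution_i = w_i * (x_i - baseline_i).
    """
    return [w * (xi - bi) for w, xi, bi in zip(WEIGHTS, x, baseline)]


if __name__ == "__main__":
    observed = [35.0, 27.5, 3010.0]
    contributions = attributions(observed)
    ranked = sorted(zip(FEATURES, contributions), key=lambda p: abs(p[1]), reverse=True)
    for name, c in ranked:
        print(f"{name:>18}: {c:+.2f}")
    print("score change:", anomaly_score(observed) - anomaly_score(NOMINAL))
```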

Beyond traditional intrusion detection systems, space systems require advanced anomaly detection capabilities specifically designed to monitor the behaviour of AI models and their outputs. These systems should leverage machine learning to establish baselines of normal AI operation and detect subtle deviations that might indicate an ongoing attack, a compromised model or an AI system operating outside its intended parameters due to environmental factors or unforeseen data inputs.
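A minimal version of such behavioural baselining learns the mean and spread of a monitored AI output during a trusted period and then flags values that sit too many standard deviations away. The sketch uses Welford's online algorithm so memory use stays constant on a constrained processor; the monitored quantity (for example a classifier's confidence score), the sample values and the 4-sigma threshold are assumptions. Freezing the baseline after the trusted period is a deliberate choice: a baseline that keeps adapting could be slowly walked towards malicious behaviour by an attacker.

```python
import math


class BehaviourBaseline:
    """Mean/variance of a monitored AI output, learned during a trusted period."""

    def __init__(self, threshold: float = 4.0):
        self.n = 0
        self.mean = 0.0
        self.m2 = 0.0               # running sum of squared deviations (Welford)
        self.threshold = threshold  # hypothetical: flag beyond 4 standard deviations
        self.frozen = False

    def learn(self, value: float) -> None:
        if self.frozen:
            raise RuntimeError("baseline is frozen; learning is disabled in operation")
        self.n += 1
        delta = value - self.mean
        self.mean += delta / self.n
        self.m2 += delta * (value - self.mean)

    def freeze(self) -> None:
        if self.n < 2:
            raise ValueError("need at least two samples to estimate spread")
        self.frozen = True

    def is_anomalous(self, value: float) -> bool:
        if not self.frozen:
            raise RuntimeError("call freeze() after the trusted learning period")
        std = math.sqrt(self.m2 / (self.n - 1))
        if std == 0.0:
            return value != self.mean
        return abs(value - self.mean) / std > self.threshold
```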

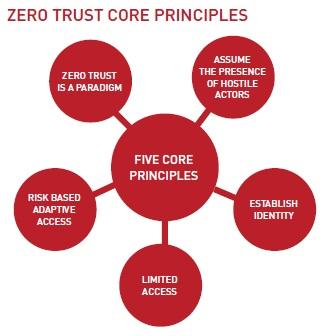

Adopting Zero Trust Architecture (ZTA) principles, which assume no implicit trust and continuously verify every user, device, application and data flow, is essential for securing complex, distributed space networks incorporating AI. This involves micro-segmentation, strong multi-factor authentication for all interactions with AI systems (both human and machine-to-machine), least privilege access controls and continuous monitoring of AI components and their communications, adapted for the latency and bandwidth constraints of space.
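At its core, zero trust means every request is evaluated on its own merits, with access denied unless an explicit policy allows it. The sketch below shows a deny-by-default policy decision with an audit trail. The principals, actions, resources and the boolean standing in for per-request credential and multi-factor verification are hypothetical; a real deployment would rely on cryptographic identity rather than a flag.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Request:
    principal: str       # identity of the human operator or machine service
    authenticated: bool  # hypothetical: outcome of credential + MFA checks for THIS request
    action: str
    resource: str


# Hypothetical least-privilege policy: each principal gets only what it needs.
POLICY = {
    ("ops-console", "read", "ai-model/telemetry"),
    ("flight-dynamics", "submit", "ai-model/manoeuvre-plan"),
    ("update-service", "install", "ai-model/weights"),
}

AUDIT_LOG = []  # every decision is recorded for continuous monitoring


def authorise(req: Request) -> bool:
    """Deny by default; nothing is trusted because of network location or a
    previous session, so every request is evaluated afresh."""
    allowed = req.authenticated and (req.principal, req.action, req.resource) in POLICY
    AUDIT_LOG.append((req.principal, req.action, req.resource, allowed))
    return allowed
```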

Developing space-specific security solutions that address AI-specific risks, vulnerabilities and attack vectors is equally vital, as is a shared basis for consistent risk assessment, threat modelling and information sharing across the industry and with international partners. High-fidelity digital twins are one such solution: virtual replicas of space systems and their supporting ground infrastructure, including AI components, allow for realistic simulation, testing of AI resilience against cyberattacks, validation of defence mechanisms and training of response personnel in a safe environment, both before deployment and during operations.

Developing secure, reliable and efficient mechanisms for remotely patching AI software and updating AI models on operational satellites is paramount. This includes robust authentication of update packages, integrity checks, rollback capabilities in case of failed updates and methods optimised for low-bandwidth, high-latency communication links.
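The update requirements listed above (authentication, integrity checks and rollback) can be combined in an A/B slot scheme: a new model is written only to the inactive slot and activated after it passes a self-test, so a failed update never touches the running model. In the sketch below an HMAC stands in for the digital signature a real mission would more likely use (Python's standard library has no signature primitive), and the slot layout, version encoding and self-test callback are hypothetical.

```python
import hashlib
import hmac


def package_tag(key: bytes, blob: bytes, version: int) -> bytes:
    """MAC over version and payload, computed on the ground before uplink."""
    return hmac.new(key, version.to_bytes(4, "big") + blob, hashlib.sha256).digest()


class ModelSlots:
    """Hypothetical A/B storage for an onboard AI model."""

    def __init__(self, key: bytes, model: bytes, version: int):
        self.key = key
        self.slots = {"A": model, "B": None}
        self.active = "A"
        self.version = version

    def active_model(self) -> bytes:
        return self.slots[self.active]

    def apply_update(self, blob: bytes, version: int, tag: bytes, self_test) -> str:
        # 1. Authenticity and integrity: reject anything not produced with the key.
        if not hmac.compare_digest(tag, package_tag(self.key, blob, version)):
            return "rejected: authentication failed"
        # 2. Anti-rollback: refuse older (possibly vulnerable) versions.
        if version <= self.version:
            return "rejected: not newer than installed version"
        # 3. Write only to the inactive slot; the running model is untouched.
        spare = "B" if self.active == "A" else "A"
        self.slots[spare] = blob
        # 4. Activate only if the new model passes its self-test.
        if not self_test(blob):
            self.slots[spare] = None
            return "rolled back: self-test failed"
        self.active, self.version = spare, version
        return "installed"


if __name__ == "__main__":
    key = bytes(32)  # placeholder only; real keys come from a key-management system
    slots = ModelSlots(key, b"model-v1", version=1)
    looks_valid = lambda blob: blob.startswith(b"model")  # hypothetical self-test
    print(slots.apply_update(b"model-v2", 2, package_tag(key, b"model-v2", 2), looks_valid))
    print(slots.apply_update(b"broken-v3", 3, package_tag(key, b"broken-v3", 3), looks_valid))
    print("running:", slots.active_model())
```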

In May 2025, the European Space Agency took an important step in ensuring the continued security of its critical infrastructure with the inauguration of a new Cyber Security Operations Centre (C-SOC) at its European Space Operations Centre in Germany.

Policymakers, international bodies and industry consortia must collaborate to develop and promote dedicated cybersecurity frameworks, technical standards and best practices specifically for AI in space. This includes guidelines for AI safety, security assurance, ethical considerations and data governance.

In addition, establishing trusted channels and mechanisms for sharing information on AI-related cyber threats, vulnerabilities, incidents and mitigation techniques among space operators, government agencies and international partners is crucial for collective defence and rapid response.
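
Shared threat information is most useful in a common machine-readable format. The article does not name one; STIX 2.1 (an OASIS standard) is one widely used option, and the sketch below builds a STIX 2.1 Indicator object describing a tampered onboard AI model file by its SHA-256 hash. The scenario, name and hash value are hypothetical, and publishing the object over a sharing channel (for example a TAXII server) is not shown.

```python
import json
import uuid
from datetime import datetime, timezone


def stix_timestamp(dt: datetime) -> str:
    """STIX timestamps are UTC, RFC 3339, with a trailing 'Z' (millisecond precision here)."""
    return dt.strftime("%Y-%m-%dT%H:%M:%S.") + f"{dt.microsecond // 1000:03d}Z"


def model_tamper_indicator(sha256_hex: str, description: str, now=None) -> dict:
    """Build a STIX 2.1 Indicator for a hypothetical tampered AI model file."""
    now = now or datetime.now(timezone.utc)
    ts = stix_timestamp(now)
    return {
        "type": "indicator",
        "spec_version": "2.1",
        "id": f"indicator--{uuid.uuid4()}",
        "created": ts,
        "modified": ts,
        "name": "Tampered onboard AI model file",
        "description": description,
        "indicator_types": ["malicious-activity"],
        "pattern": f"[file:hashes.'SHA-256' = '{sha256_hex}']",
        "pattern_type": "stix",
        "valid_from": ts,
    }


indicator = model_tamper_indicator("ab" * 32, "Model file with unexpected hash observed during update")
print(json.dumps(indicator, indent=2))
```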

While AI enables greater autonomy, mission-critical decisions, particularly those with irreversible or high-impact consequences, must incorporate appropriate levels of human judgment and oversight. Designing robust human-machine interfaces, clear protocols for intervention and ensuring that human operators maintain situational awareness and ultimate authority are key to managing the risks associated with highly autonomous AI systems in space.
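
One way to encode "appropriate levels of human judgment" is an oversight gate between the AI planner and the actuators. In the sketch below, low-impact reversible actions run autonomously, while anything irreversible or high-impact waits for an explicit operator decision. The two-level impact scale, the classification rule and the action names are hypothetical simplifications.

```python
from dataclasses import dataclass
from enum import Enum


class Impact(Enum):
    LOW = 1
    HIGH = 2


@dataclass(frozen=True)
class ProposedAction:
    name: str
    impact: Impact
    reversible: bool


def needs_human(action: ProposedAction) -> bool:
    """Irreversible or high-impact actions always require human approval."""
    return action.impact is Impact.HIGH or not action.reversible


class OversightGate:
    """Sits between the AI planner and the actuators; humans keep final authority."""

    def __init__(self):
        self.pending = []
        self.executed = []

    def submit(self, action: ProposedAction) -> str:
        if needs_human(action):
            self.pending.append(action)
            return "awaiting operator approval"
        self.executed.append(action)
        return "executed autonomously"

    def approve(self, name: str, operator_id: str) -> str:
        for action in self.pending:
            if action.name == name:
                self.pending.remove(action)   # safe: we return immediately after
                self.executed.append(action)
                return f"executed with approval from {operator_id}"
        return "no such pending action"

    def veto(self, name: str) -> bool:
        for action in self.pending:
            if action.name == name:
                self.pending.remove(action)
                return True
        return False
```

A practical design would also need a policy for what happens if no decision arrives within the communication window, for example holding in a safe mode rather than proceeding.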

AI technology supports the Perseverance rover’s navigation across Mars, improving accuracy in unfamiliar terrain; it also helps the rover plan and schedule tasks autonomously and supports its scientific mission. AI systems such as those used on Perseverance can be highly sensitive to their input data, so unauthorised modification (tampering) of data before or during AI processing could lead to flawed analyses, incorrect decisions and even mission failure.
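
A common defence against this kind of tampering is to authenticate data at the point of acquisition and verify it before the AI ever sees it. The sketch below chains an HMAC across successive sensor frames, so that modifying, inserting, deleting or reordering a frame breaks verification from that point on. It does not describe Perseverance's actual data pipeline; the shared key and frame contents are hypothetical.

```python
import hashlib
import hmac

SENSOR_KEY = b"demo-sensor-key"   # hypothetical key shared by sensor and AI processor
GENESIS = b"\x00" * 32            # fixed starting value for the chain


def tag_frames(frames: list) -> list:
    """Sensor side: tag_i = HMAC(key, tag_{i-1} + frame_i)."""
    prev = GENESIS
    tagged = []
    for frame in frames:
        prev = hmac.new(SENSOR_KEY, prev + frame, hashlib.sha256).digest()
        tagged.append((frame, prev))
    return tagged


def verify_chain(tagged: list) -> list:
    """Processor side: return frames only if every chained tag checks out."""
    prev = GENESIS
    accepted = []
    for i, (frame, tag) in enumerate(tagged):
        expected = hmac.new(SENSOR_KEY, prev + frame, hashlib.sha256).digest()
        if not hmac.compare_digest(expected, tag):
            raise ValueError(f"integrity check failed at frame {i}; withhold from AI processing")
        accepted.append(frame)
        prev = tag
    return accepted
```

One limitation is worth noting: the chain alone cannot reveal that the most recent frames were silently dropped from the end of a batch, so a signed frame count or sequence number is usually added alongside it.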

Securing the future of AI in space

The ascent of Artificial Intelligence as an indispensable force in space exploration and operations presents a transformative opportunity. Yet, as this article has shown, it also ushers in a new epoch of complex cybersecurity challenges. The stakes are undeniably high, encompassing the integrity of critical global infrastructure, the success of ambitious deep-space missions and the safety of future human endeavours beyond Earth. Addressing these AI-specific vulnerabilities is not merely an option but an urgent imperative. This demands proactive engagement and a nuanced understanding of the unique, often unforgiving, space context.

Securing this AI-driven frontier is not a task for isolated solutions or piecemeal fixes. Instead, it requires cultivating a robust, multi-layered and adaptable security posture that seamlessly integrates cutting-edge technological defences. These include inherently secure AI architectures, advanced threat detection and resilient data pipelines, all working in concert with rigorous operational processes and forward-thinking policy frameworks. Only through such a holistic strategy can we hope to outpace the evolving threat landscape and build genuine trust in the autonomous systems that will increasingly define our presence in space.

Therefore, the path forward demands a concerted and collaborative global effort. Researchers must continue to innovate in areas like explainable AI and verifiable security. Industry leaders need to champion secure-by-design principles throughout the AI lifecycle.

Simultaneously, policymakers, working with international bodies, must strive to establish clear standards, ethical guidelines and cooperative mechanisms for AI governance in space. The responsible stewardship of AI in this critical domain is paramount. By working together to build secure, resilient and trustworthy AI, we lay the foundation upon which humanity can confidently build its future among the stars, ensuring that our expansion into the cosmos is both ambitious and secure.

About the author

Prof Sylvester Kaczmarek is Founder & CEO of OrbiSky, where he specialises in the integration of artificial intelligence, robotics, cybersecurity, and edge computing in aerospace applications. His expertise includes architecting and leading the development of secure AI/machine learning capabilities and advancing cislunar robotic intelligence systems. Read more at SylvesterKaczmarek.com